Google made the right choice not diving into consumer AI bots

There was zero input from any sort of artificial intelligence involved in this article.

I feel like I might need to make that disclaimer with everything I write now because everywhere you look it seems that people are leaning on AI to write the words people used to write.

I get it: it's easy, and like everyone else, folks who write on the internet for a living love anything that makes the job easier. But it also sucks, and it makes for really horrible reading that's filled with misinformation. To me, it's not worth the trade. Been there, done that.

Interestingly, at least to me, Google has made the same decision about AI-produced articles. It might not have been for the right reason(s), but like a broken clock, Google gets it right every so often.

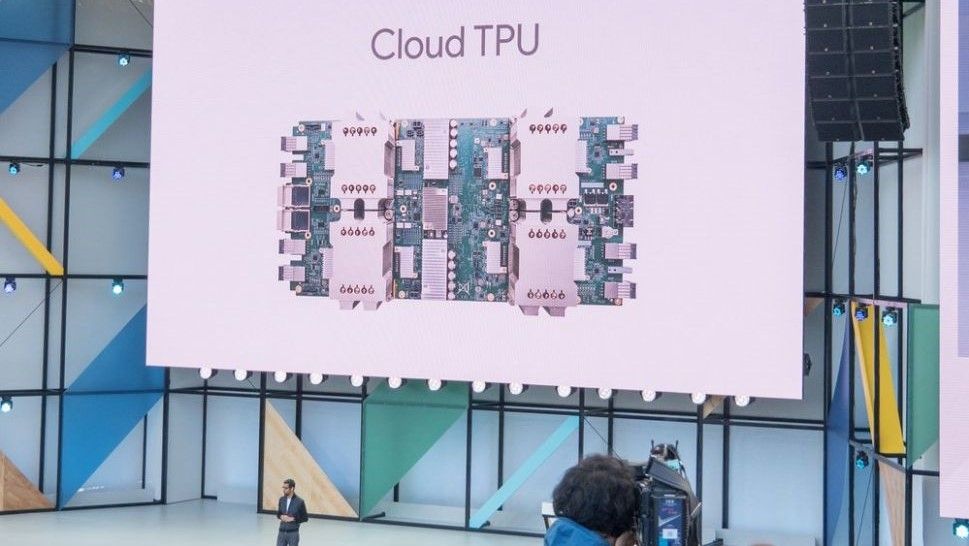

Google is one of the companies at the forefront of AI (artificial intelligence) and LLM (Large Language Model) research and has demoed some amazing tools. AI is used in many parts of your Android phone, as well as a slew of Google services, but the company hasn't released any comprehensive consumer-focused AI interface that can write term papers or blog posts.

The official reason why, according to Alphabet CEO Sundar Pichai and SVP of Google Research Jeff Dean, sounds fishy: "the cost if something goes wrong would be greater because people have to trust the answers they get from Google."

Like almost everyone else, I took that answer to be some sort of code that really means "we haven't found a way to monetize it and use it for search" and figured that Google was blowing smoke. Whatever the reasons, it's beginning to look like the company made the right choice.

AI does what it is taught and nothing more

AI is neither artificial nor intelligent. It's software that retains what it has been fed and presents it the way it was programmed to. It can (almost) drive a car, it can identify a cat, and it can write essay-length content people try to pass off as their own.

Some examples of AI being dangerous also exist, such as suggesting that wood chips make cereal taste better or that crushed porcelain adds calcium and essential nutrients when added to baby formula. I was turned off when AI clearly plagiarized human authors and disregarded any sense of journalistic ethics, but this is insane.

Imagine if any of that had come from Google. We lose our collective minds when the camera app takes three seconds to load, and that's before we've eaten any wood chips that would probably make us even more cranky.

Again, imagine if Google had given out wrong information in the form of a financial advice article built on basic math mistakes. That would have ended with Pichai dragging a wooden cross up a hillside if the people had their way. We hate Google, but we also trust Google to tell us where to find what we're looking for and not give us bad advice. We probably shouldn't, but we do.

There is no going back

None of this changes the fact that AI is quickly replacing the outsourcing and freelance writing industry. That's unfortunate, but it's also partially Google's fault.

In case you don't know already, finding ways to put buzzwords in a title to drive it toward the top of Google search results is an entire industry. Every internet content-creating business does it, including Android Central and my favorite YouTuber, who has started to call all the broken-down cars he fixes "abandoned" and "forgotten". Those words move the video toward the top of the search results, just like "best" moves a written article higher in search. Those are the words we use to search for "stuff", so that's what we get.

AI is really good at SEO

It's called Search Engine Optimization, and AI is really good at it. The actual content of an article doesn't matter, because once you're there and have seen the ads, the website can count it as a hit, just as if you had spent 10 minutes reading worthwhile content.

You've noticed Google (and Bing, and Jeeves or whatever other search engine you use) results getting worse because of SEO. AI will make this even worse. It doesn't have to be this way.

Words written by an AI are simple to detect. You can try the demo of this tool by Edward Tian to learn more about the methodology used, but if one person can write an accurate tool to root out AI-written content in just a weekend, Google can filter that content out of search results. Yet it doesn't.

There is probably a reasonable explanation why, but we don't know it and frankly, I don't care what it is. AI-written articles are just bad and I would love to never be linked to one by a search engine.

Google will probably jump on the consumer AI bot train eventually, maybe even in 2023. It's not going to be pretty, because chances are Google has a far more advanced conversational AI than anything we've seen so far. It's going to need to be heavily censored and constantly tweaked so it doesn't turn into something that makes Microsoft Tay look tame and comforting.

Then it will have a reason to not filter AI out of search results and our lives forever. 💰